How many angels can dance on the head of a pin? What a crazy question; it might be good for a song title (I was thinking of something like "Duran Duran - Like An Angel"), but oh well. And how many subatomic particles can dance there? And do we know the reason for the distance between the proton and the electron in a hydrogen atom?

It might be an interesting set of questions, but the more interesting one is why Stephen Hawking has warned us in that way. He is a scientist with almost the IQ of Albert Einstein, so he should know that artificial intelligence is not like the one described by William Gibson.

I mean, in the Sprawl trilogy {Neuromancer, Count Zero, Mona Lisa Overdrive} William Gibson wrote several times that an Artificial Intelligence must have a gun pointed at its head, but oh well ... we have failed at A.I. for the last 50 years, we are far from the "singularity-event", and Hawking's friend Roger Penrose has written up to 4 books just to say we can't do A.I. without first improving math and physics. We are also still struggling with why Einstein and Quantum Mechanics can't get along, and Stephen Hawking has contributed more to describing black holes.

So ... I think that before meeting a true A.I. bot, humans will understand the graviton and gravity well enough to build flying robots without wasting so much energy on the ESC technology (brushless motors + propellers, controlled by a dedicated MPU whose purpose is to be fast and accurate in its response) that we currently use to make our quadrotors and hexarotors fly. These bots are cool and everyone wants to buy one, but look at their autonomy: usually they can fly for 30-40 minutes, and then they need to land because their batteries are almost drained.

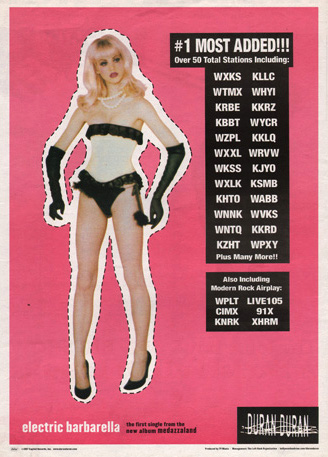

Electric Barbarella by Duran Duran

BTW, if A.I. ever gets fitted into a robot mind, then I want to see it dressed like Electric Barbarella (just to reinvent the definition of "machine-porn"; currently that definition applies only to a vintage SGI under the desk).

MyLoft() <<
