hamei wrote:

Finally had a minute ...

ShadeOfBlue wrote:

This is partially true. It works great for IO-bound tasks, but for CPU-bound tasks it's different.

I've been thinking about this for a while and have come to the conclusion that you have been misled: no, it is not different.

You're looking at this from the wrong point of view. I am only speaking of the situation as it pertains to workstations.

In the case of a workstation, the top priority is user responsiveness. Not ultimate performance, not least power consumption, not the best benchmarks on a single type of task; the overriding requirement is responsiveness to user input. I guess this point is somewhat lost on people who have only used Unix, Windows or OS X. But if you had ever used a system that truly prioritized the user, you would never be happy with the rest of this crap again.

Irix is not very good at this. I am quite disappointed in the performance of a dual-processor O350. If you keep gr_osview open on the desktop, you will see that one processor is almost always more heavily loaded than the other. This is poor-quality software. OS/2 does not do that. BeOS does not do that.

In practice, it does make a difference. With the Fuel, I would get situations where (for example) a swiped bit of text would not middle-click paste into nedit instantly. The processor was busy with something else. I was looking forward to going two-up ... but what do I find? Two processors do the same thing. Irix is not well designed (from a workstation point of view). This should never, ever, ever happen.

I am god here, and the damned computer had better understand that.

Your example was also not so great, for the following reason: a computer cannot spawn thirty threads simultaneously. If it spawns thirty threads, they will be spaced out at some time interval, let's say 3 milliseconds just for fun. During those three milliseconds a lot of other events will be occurring as well. And then the next thread, another three milliseconds. The rendering program does not own the entire computer, ever, and you can't spawn multiple threads simultaneously, ever, either. So your example doesn't really hold true in practice.
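To make the point about staggered thread starts concrete, here is a small sketch in Python (an illustration only: CPython threads are not a perfect stand-in for a renderer's native threads, and the count of 30 simply mirrors the example in this post). It records when each thread actually begins running and reports the spread between the first and last start. The figures depend entirely on the machine and scheduler, so none are quoted here.

```python
import threading
import time

def measure_start_spread(n_threads=30):
    """Start n_threads threads; return (earliest, latest) start times in seconds."""
    starts = [0.0] * n_threads

    def worker(i):
        # Record the moment this thread is first scheduled and runs.
        starts[i] = time.perf_counter()

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n_threads)]
    for t in threads:
        t.start()  # each start() is a separate call: threads launch one after another
    for t in threads:
        t.join()
    return min(starts), max(starts)

earliest, latest = measure_start_spread()
print(f"spread between first and last thread start: {(latest - earliest) * 1e3:.3f} ms")
```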

But more to the point, it ignores the prime consideration for a workstation: PAY ATTENTION TO THE USER. If I am writing an email while some app is rendering a 3840x2400 graphic in the background, I really don't give a shit if it takes .25 seconds longer or even 25 seconds longer. What I care about is that the edits in my email do not get hung up even for 1/10th of a second.

What I am currently doing is what counts, not the stupid background render job.

[...]

Which brings me back to this project ... I am all for people writing software. Really. Even if it's something I couldn't care less about, it's still good. Without software, computers are not useful. And this is kind of a fun little utility; it could be nifty to know how many cores are in a box, or how many hyper-threaded pseudo-processors, or whatever. But as a means to make multi-threaded software work better, it's tits on a boar. Developing more poorly thought-out, badly coded, untested, crappy GNU libraries is the wrong approach to getting the most out of multiple processors. The programs have to be written with the task in mind, not just have some cool modern 'parallel library' tacked on to a junky, badly structured application. Junky single-threaded, junky multi-threaded, who cares? Junk is junk.

Hi Hamei, I'm not saying that knowing the number of processors on a system is useful for the average application. I'm saying that it's one piece of information that may be necessary if someone is writing a special type of library or application that uses multiprocessing. It's not for the typical program, but for a very CPU-intensive task that takes a long time to complete. Most people don't have these types of problems: their programs take a second to complete and are typically I/O-bound, network-bound, or something like that.
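A minimal sketch of the kind of program described here, using only Python's standard library: it asks the OS how many processors are available with `os.cpu_count()` and splits a CPU-bound job across that many worker processes with `multiprocessing.Pool`. The work function (`count_primes`) and the chunking scheme are hypothetical stand-ins chosen for illustration, not code from this thread.

```python
import os
from multiprocessing import Pool

def count_primes(bounds):
    """Count primes in [lo, hi) by trial division; deliberately CPU-bound."""
    lo, hi = bounds
    count = 0
    for n in range(max(lo, 2), hi):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count

def parallel_prime_count(limit, workers=None):
    """Count primes below limit, one chunk of the range per worker process."""
    if limit <= 2:
        return 0
    # os.cpu_count() may return None if the count cannot be determined.
    workers = workers or os.cpu_count() or 1
    step = -(-limit // workers)  # ceiling division so the chunks cover [0, limit)
    chunks = [(i, min(i + step, limit)) for i in range(0, limit, step)]
    with Pool(processes=workers) as pool:
        return sum(pool.map(count_primes, chunks))

if __name__ == "__main__":
    print(parallel_prime_count(100_000))
```

Splitting by contiguous ranges is the simplest scheme; the higher chunks contain larger numbers and take a little longer, which is one reason real speedups fall short of the processor count.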

The reason for using all available processors would not be to make the program complete in 1 second instead of 2 seconds. The idea is that the job is significant enough that one processor is insufficient to get it done in a reasonable amount of time. For example, a job that might take 90 minutes with one process can potentially complete in 30 minutes with four processes. Of course, not every concurrency problem can be solved with simple approaches like this, but for some it's an elegant solution. It's important to use the right tool for the job, and to know when optimization at this level is and isn't necessary.
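The 90-minute and 30-minute figures imply a speedup of 3 on four processes, i.e. 75% parallel efficiency rather than a perfect 4x. One common way to reason about that gap is Amdahl's law, S(N) = 1 / ((1 - p) + p / N), where p is the fraction of the work that parallelizes. Applying it here is an assumption: the post does not say why the speedup is sublinear, and overheads such as I/O or memory bandwidth would produce the same effect. The sketch computes the speedup and solves for the p the example's numbers would imply.

```python
def amdahl_speedup(p, n):
    """Amdahl's law: speedup on n processors when a fraction p of the work is parallel."""
    return 1.0 / ((1.0 - p) + p / n)

def implied_parallel_fraction(speedup, n):
    """Invert Amdahl's law: the parallel fraction p that yields `speedup` on n processors."""
    return (1.0 - 1.0 / speedup) / (1.0 - 1.0 / n)

serial_minutes, parallel_minutes, procs = 90, 30, 4
speedup = serial_minutes / parallel_minutes    # 3.0
efficiency = speedup / procs                   # 0.75
p = implied_parallel_fraction(speedup, procs)  # 8/9, about 0.889
print(f"speedup={speedup:.2f} efficiency={efficiency:.0%} implied p={p:.3f}")
# Under this model, adding processors can never beat 1 / (1 - p).
print(f"ceiling as n grows: {1 / (1 - p):.1f}x")
```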

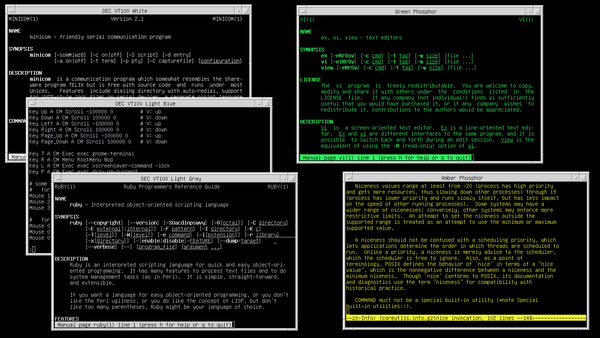

Without multiprocessing, most of the machine would sit idle and my time would be wasted (to me, that's the truly bad user experience: waiting around for an extra hour). As it is, even with the program pushing all four CPUs to 100%, the system is still responsive. If there were a problem, I would just "nice" the program to a lower scheduling priority. I've found, though, that the Linux scheduler seems to handle everything fine, and the only difference I notice in my user experience is the noise of the fans when they kick in. Basically, the processors are there to be used, not to sit around idle, and the kernel's scheduler should decide how to divide CPU time between processes.
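For the "nice it" fallback mentioned here, a long-running Python job can lower its own scheduling priority on Unix-like systems with `os.nice()`, which adds an increment to the process's niceness and returns the new value. This is roughly what launching the job with `nice -n 10` from the shell does; the increment of 10 and the helper name `lower_priority` are arbitrary choices for illustration.

```python
import os

def lower_priority(increment=10):
    """Raise this process's niceness (i.e. lower its priority); Unix-only.

    An unprivileged process can only increase its niceness, never decrease it.
    """
    before = os.nice(0)         # an increment of 0 just reads the current value
    after = os.nice(increment)  # returns the new niceness (clamped at 19 on Linux)
    return before, after

if __name__ == "__main__":
    before, after = lower_priority()
    print(f"niceness {before} -> {after}")
    # ... start the CPU-heavy work here; child processes inherit the new niceness ...
```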

In the case of IRIX, it sounds like the scheduler isn't dividing CPU time fairly between processes, in which case the scheduling algorithm itself is the problem. During the Linux 2.6 series, the scheduler was rewritten to fix similar clashes between background processes and user applications:

http://en.wikipedia.org/wiki/Completely_Fair_Scheduler

As an anecdote, it appears there was a big stink about it from some guy at Red Hat, to which Linus replied, "Numbers talk, bullshit walks. The numbers have been quoted. The clear interactive behavior has been seen."
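The core idea behind CFS, as described in the kernel's design notes, is that each runnable task accumulates "virtual runtime" scaled inversely by its weight, and the scheduler always runs the task with the smallest virtual runtime. The toy simulation below is a simplified sketch, not the kernel's code: it uses a heap instead of the kernel's red-black tree, fixed 1 ms slices, no sleeping or wakeups, and hypothetical task names. The weights for nice 0, 10 and 19 (1024, 110, 15) are taken from the kernel's nice-to-weight table. It shows why a niced background render barely dents an interactive task's share of the CPU.

```python
import heapq

NICE_0_WEIGHT = 1024
# Subset of the Linux kernel's nice-to-weight table (sched_prio_to_weight).
WEIGHTS = {0: 1024, 10: 110, 19: 15}

def simulate_cfs(tasks, total_ms=10_000, slice_ms=1):
    """Toy CFS: always run the task with the least virtual runtime.

    tasks maps a task name to its nice value; returns ms of CPU each task received.
    """
    runtime = {name: 0 for name in tasks}
    # Heap entries are (vruntime, name); ties break on name for determinism.
    queue = [(0.0, name) for name in tasks]
    heapq.heapify(queue)
    for _ in range(0, total_ms, slice_ms):
        vruntime, name = heapq.heappop(queue)
        runtime[name] += slice_ms
        # Heavier (lower-nice) tasks accumulate vruntime more slowly, so they run more often.
        vruntime += slice_ms * NICE_0_WEIGHT / WEIGHTS[tasks[name]]
        heapq.heappush(queue, (vruntime, name))
    return runtime

shares = simulate_cfs({"email_editor": 0, "background_render": 19})
print(shares)
```

With these weights the editor's expected share is 1024 / (1024 + 15), roughly 98.6% of the CPU, while the render still makes steady progress whenever the editor would otherwise be idle (idle time is not modeled here).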


As for the relative strengths and weaknesses of open source, there are good things and bad things. Certainly some development efforts like the Linux kernel, Perl, Python, Ruby, MySQL, Apache, etc. have been very successful. Some of it was famous and widely used before Linux was even around, like Perl, Emacs, the GNU toolchain, BSD Unix, etc. There's also a lot of crap code out there that is unprofessional, poorly conceived, and poorly tested. Taken as a whole, it's a big mixed bag, but that's the nature of having thousands of open development projects.

I do think it's much healthier than the 1990s era of commercial workstations, though, which were enjoyed by a privileged few (while the vast majority were stuck on toy operating systems like DOS and Windows). For me, the earlier era of timesharing and community development at Bell Labs and UCB is an ideal, and I think open source projects are closer to that ideal than the approaches later taken at HP, Sun, IBM, etc.