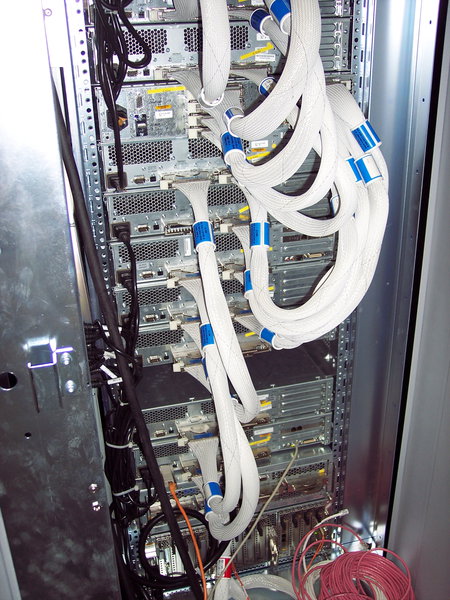

Once again, thanks to Voralyan's howto, here's the second Altix box running Debian 6. This one is a tad larger: it has 13 compute nodes, two of which have PCI slots and IO10s (SATA drives instead of SCSI), and it runs with a single-plane router. The hwinfo and dmesg output follow.
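If you want to cross-check the node count and per-node memory, you can read them from the "On node N totalpages: P" lines in the dmesg below. Here is a minimal Python sketch of that. It assumes 16 KiB kernel pages. The log never prints the page size, but 16 KiB is the usual ia64 default, and with that value the per-node totals add up to roughly the figure on the "Memory: 47902096k/48070944k available" line. The function name `node_memory_mib` is just illustrative.

```python
import re

# Assumption: 16 KiB kernel pages. The dmesg does not print the page size;
# 16 KiB is the usual ia64 default and agrees with the "Memory:" line total.
PAGE_KIB = 16

# Matches lines such as "On node 4 totalpages: 504831".
NODE_RE = re.compile(r"On node (\d+) totalpages: (\d+)")


def node_memory_mib(dmesg_text, page_kib=PAGE_KIB):
    """Return {node id: approximate memory in MiB} from 'On node N totalpages: P' lines."""
    return {
        int(node): int(pages) * page_kib / 1024
        for node, pages in NODE_RE.findall(dmesg_text)
    }


# Per-node page counts copied from the dmesg in this post (nodes 0..12).
sample = "\n".join(
    f"On node {n} totalpages: {p}"
    for n, p in enumerate([250880, 123904, 123904, 123904, 504831, 250880,
                           250879, 250880, 250880, 250880, 250880, 123904, 249712])
)

mem = node_memory_mib(sample)
for node, mib in sorted(mem.items()):
    print(f"node {node:2d}: {mib:8.0f} MiB")
print(f"total  : {sum(mem.values()):8.0f} MiB across {len(mem)} nodes")
```

The regex ignores the "[ 0.000000]" timestamp prefix, so you can feed it the raw `dmesg` text unchanged.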

```
winterstar:~# hwinfo --short
cpu:
  Madison, 1500 MHz
  Madison, 1500 MHz
  Madison, 1500 MHz
  Madison, 1500 MHz
  Madison, 1500 MHz
  Madison, 1500 MHz
  Madison, 1500 MHz
  Madison, 1500 MHz
  Madison up to 9M cache, 1500 MHz
  Madison up to 9M cache, 1500 MHz
  Madison, 1500 MHz
  Madison, 1500 MHz
  Madison, 1500 MHz
  Madison, 1500 MHz
  Madison, 1500 MHz
  Madison, 1500 MHz
  Madison, 1500 MHz
  Madison, 1500 MHz
  Madison, 1500 MHz
  Madison, 1500 MHz
  Madison, 1500 MHz
  Madison, 1500 MHz
  Madison, 1500 MHz
  Madison, 1500 MHz
  Madison up to 9M cache, 1500 MHz
  Madison up to 9M cache, 1500 MHz
keyboard:
  /dev/ttyS0 serial console
storage:
  SGI IOC4 I/O controller
  Vitesse VSC7174 PCI/PCI-X Serial ATA Host Bus Controller
  LSI Logic / Symbios Logic 53c1030 PCI-X Fusion-MPT Dual Ultra320 SCSI
  LSI Logic / Symbios Logic 53c1030 PCI-X Fusion-MPT Dual Ultra320 SCSI
  SGI IOC4 I/O controller
  Vitesse VSC7174 PCI/PCI-X Serial ATA Host Bus Controller
network:
  eth1 SGI Dual Port Gigabit Ethernet (PCI-X,Copper)
  eth2 SGI Dual Port Gigabit Ethernet (PCI-X,Copper)
  eth0 SGI IO9/IO10 Gigabit Ethernet (Copper)
  eth4 SGI Gigabit Ethernet (Copper)
  eth3 SGI IO9/IO10 Gigabit Ethernet (Copper)
  SGI Cross Partition Network adapter
network interface:
  lo Loopback network interface
  eth2 Ethernet network interface
  eth4 Ethernet network interface
  eth3 Ethernet network interface
  eth0 Ethernet network interface
  eth1 Ethernet network interface
  pan0 Ethernet network interface
disk:
  /dev/sdb HDS722580VLSA80
  /dev/sda HDS722580VLSA80
partition:
  /dev/sdb1 Partition
  /dev/sdb2 Partition
  /dev/sdb3 Partition
  /dev/sda1 Partition
  /dev/sda2 Partition
  /dev/sda3 Partition
cdrom:
  /dev/hda MATSHITADVD-ROM SR-8177
  /dev/hdc MATSHITADVD-ROM SR-8178
memory:
  Main Memory
unknown:
  Timer
  PS/2 Controller
winterstar:~#
```
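The `cpu:` section above lists 26 processors. hwinfo labels four of them "Madison up to 9M cache" and the other 22 plain "Madison". Here is a small Python sketch that tallies the entries per model string from `hwinfo --short` text. It treats any line ending in ":" as a section header, which is my assumption about the format and holds for the listing above. The sample string keeps the counts from this post but simplifies their order.

```python
from collections import Counter


def count_cpus(hwinfo_short):
    """Count entries per model string in the 'cpu:' section of `hwinfo --short` output."""
    counts = Counter()
    in_cpu = False
    for raw in hwinfo_short.splitlines():
        line = raw.strip()
        if not line:
            continue  # tolerate blank lines, e.g. from forum copy/paste
        if line.endswith(":"):
            # Section headers look like 'cpu:', 'storage:', 'network interface:'.
            in_cpu = line == "cpu:"
            continue
        if in_cpu:
            counts[line] += 1
    return counts


# Counts taken from the listing above (order simplified).
sample = (
    "cpu:\n"
    + "  Madison, 1500 MHz\n" * 22
    + "  Madison up to 9M cache, 1500 MHz\n" * 4
    + "keyboard:\n"
    + "  /dev/ttyS0 serial console\n"
)

for model, n in count_cpus(sample).most_common():
    print(f"{n:3d} x {model}")
```

On the machine itself, you could pass it the live output from `subprocess.run(["hwinfo", "--short"], capture_output=True, text=True).stdout`. The total of 26 should agree with the "26 CPUs available, 26 CPUs total" line in the dmesg.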


winterstar:~# dmesg

[ 0.000000] Initializing cgroup subsys cpuset

[ 0.000000] Initializing cgroup subsys cpu

[ 0.000000] Linux version 2.6.32-5-mckinley (Debian 2.6.32-38) ([email protected]) (gcc version 4.3.5 (Debian 4.3.5-4) ) #1 SMP Mon Oct 3 06:04:14 UTC 2011

[ 0.000000] EFI v1.10 by INTEL: SALsystab=0x3002a09c90 ACPI 2.0=0x3002a09d80

[ 0.000000] booting generic kernel on platform sn2

[ 0.000000] console [sn_sal0] enabled

[ 0.000000] ACPI: RSDP 0000003002a09d80 00024 (v02 SGI)

[ 0.000000] ACPI: XSDT 0000003002a0e3d0 00044 (v01 SGI XSDTSN2 00010001 ? 0000008C)

[ 0.000000] ACPI: APIC 0000003002a0a5f0 00164 (v01 SGI APICSN2 00010001 ? 00000001)

[ 0.000000] ACPI: SRAT 0000003002a0a770 003D8 (v01 SGI SRATSN2 00010001 ? 00000001)

[ 0.000000] ACPI: SLIT 0000003002a0ab60 000D5 (v01 SGI SLITSN2 00010001 ? 00000001)

[ 0.000000] ACPI: FACP 0000003002a0aca0 000F4 (v03 SGI FACPSN2 00030001 ? 00000001)

[ 0.000000] ACPI Warning: 32/64X length mismatch in Pm1aEventBlock: 32/0 (20090903/tbfadt-526)

[ 0.000000] ACPI Warning: 32/64X length mismatch in Pm1aControlBlock: 16/0 (20090903/tbfadt-526)

[ 0.000000] ACPI Warning: 32/64X length mismatch in PmTimerBlock: 32/0 (20090903/tbfadt-526)

[ 0.000000] ACPI Warning: 32/64X length mismatch in Gpe0Block: 64/0 (20090903/tbfadt-526)

[ 0.000000] ACPI Warning: Invalid length for Pm1aEventBlock: 0, using default 32 (20090903/tbfadt-607)

[ 0.000000] ACPI Warning: Invalid length for Pm1aControlBlock: 0, using default 16 (20090903/tbfadt-607)

[ 0.000000] ACPI Warning: Invalid length for PmTimerBlock: 0, using default 32 (20090903/tbfadt-607)

[ 0.000000] ACPI: DSDT 0000003002a0d700 00024 (v02 SGI DSDTSN2 00020001 ? 000009A0)

[ 0.000000] ACPI: FACS 0000003002a0ac50 00040

[ 0.000000] ACPI: Local APIC address c0000000fee00000

[ 0.000000] Number of logical nodes in system = 13

[ 0.000000] Number of memory chunks in system = 13

[ 0.000000] SMP: Allowing 26 CPUs, 0 hotplug CPUs

[ 0.000000] Initial ramdisk at: 0xe0000630f526f000 (18309670 bytes)

[ 0.000000] SAL 3.2: SGI SN2 version 5.4

[ 0.000000] SAL Platform features: ITC_Drift

[ 0.000000] SAL: AP wakeup using external interrupt vector 0x12

[ 0.000000] ACPI: Local APIC address c0000000fee00000

[ 0.000000] register_intr: No IOSAPIC for GSI 52

[ 0.000000] 26 CPUs available, 26 CPUs total

[ 0.000000] Increasing MCA rendezvous timeout from 20000 to 49000 milliseconds

[ 0.000000] MCA related initialization done

[ 0.000000] ACPI: RSDP 0000003002a09d80 00024 (v02 SGI)

[ 0.000000] ACPI: XSDT 0000003002a0e3d0 0008C (v01 SGI XSDTSN2 00010001 ? 0000008C)

[ 0.000000] ACPI: APIC 0000003002a0a5f0 00164 (v01 SGI APICSN2 00010001 ? 00000001)

[ 0.000000] ACPI: SRAT 0000003002a0a770 003D8 (v01 SGI SRATSN2 00010001 ? 00000001)

[ 0.000000] ACPI: SLIT 0000003002a0ab60 000D5 (v01 SGI SLITSN2 00010001 ? 00000001)

[ 0.000000] ACPI: FACP 0000003002a0aca0 000F4 (v03 SGI FACPSN2 00030001 ? 00000001)

[ 0.000000] ACPI Warning: 32/64X length mismatch in Pm1aEventBlock: 32/0 (20090903/tbfadt-526)

[ 0.000000] ACPI Warning: 32/64X length mismatch in Pm1aControlBlock: 16/0 (20090903/tbfadt-526)

[ 0.000000] ACPI Warning: 32/64X length mismatch in PmTimerBlock: 32/0 (20090903/tbfadt-526)

[ 0.000000] ACPI Warning: 32/64X length mismatch in Gpe0Block: 64/0 (20090903/tbfadt-526)

[ 0.000000] ACPI Warning: Invalid length for Pm1aEventBlock: 0, using default 32 (20090903/tbfadt-607)

[ 0.000000] ACPI Warning: Invalid length for Pm1aControlBlock: 0, using default 16 (20090903/tbfadt-607)

[ 0.000000] ACPI Warning: Invalid length for PmTimerBlock: 0, using default 32 (20090903/tbfadt-607)

[ 0.000000] ACPI: DSDT 0000003002a0d700 009A0 (v02 SGI DSDTSN2 00020101 ? 000009A0)

[ 0.000000] ACPI: FACS 0000003002a0ac50 00040

[ 0.000000] ACPI: SSDT 0000003002a0cd70 00095 (v02 SGI SSDTSN2 00020101 ? 00000095)

[ 0.000000] ACPI: SSDT 0000003002a0ce80 00095 (v02 SGI SSDTSN2 00020101 ? 00000095)

[ 0.000000] ACPI: SSDT 0000003002a0e0b0 00095 (v02 SGI SSDTSN2 00020101 ? 00000095)

[ 0.000000] ACPI: SSDT 0000003002a0cf30 000F5 (v02 SGI SSDTSN2 00020101 ? 000000F5)

[ 0.000000] ACPI: SSDT 0000003002a0e160 00096 (v02 SGI SSDTSN2 00020101 ? 00000096)

[ 0.000000] ACPI: SSDT 0000003002a0d040 00096 (v02 SGI SSDTSN2 00020101 ? 00000096)

[ 0.000000] ACPI: SSDT 0000003002a0e210 00096 (v02 SGI SSDTSN2 00020101 ? 00000096)

[ 0.000000] ACPI: SSDT 0000003002a0d0f0 00096 (v02 SGI SSDTSN2 00020101 ? 00000096)

[ 0.000000] ACPI: SSDT 0000003002a0e2c0 000F7 (v02 SGI SSDTSN2 00020101 ? 000000F7)

[ 0.000000] SGI: Disabling VGA console

[ 0.000000] SGI SAL version 5.04

[ 0.000000] Virtual mem_map starts at 0xa0007ffa95200000

[ 0.000000] Zone PFN ranges:

[ 0.000000] DMA 0x00c00c00 -> 0x1000000000

[ 0.000000] Normal 0x1000000000 -> 0x1000000000

[ 0.000000] Movable zone start PFN for each node

[ 0.000000] early_node_map[16] active PFN ranges

[ 0.000000] 0: 0x00c00c00 -> 0x00c3e000

[ 0.000000] 1: 0x02c00c00 -> 0x02c1f000

[ 0.000000] 2: 0x04c00c00 -> 0x04c1f000

[ 0.000000] 3: 0x06c00c00 -> 0x06c1f000

[ 0.000000] 4: 0x08c00c00 -> 0x08c3e000

[ 0.000000] 4: 0x08d00000 -> 0x08d3dfff

[ 0.000000] 5: 0x0ac00c00 -> 0x0ac3e000

[ 0.000000] 6: 0x0cc00c00 -> 0x0cc3dfff

[ 0.000000] 7: 0x0ec00c00 -> 0x0ec3e000

[ 0.000000] 8: 0x10c00c00 -> 0x10c3e000

[ 0.000000] 9: 0x12c00c00 -> 0x12c3e000

[ 0.000000] 10: 0x14c00c00 -> 0x14c3e000

[ 0.000000] 11: 0x16c00c00 -> 0x16c1f000

[ 0.000000] 12: 0x18c00c00 -> 0x18c3d9ff

[ 0.000000] 12: 0x18c3de00 -> 0x18c3df54

[ 0.000000] 12: 0x18c3df65 -> 0x18c3df82

[ 0.000000] On node 0 totalpages: 250880

[ 0.000000] free_area_init_node: node 0, pgdat e000003003120000, node_mem_map a0007ffabf22a000

[ 0.000000] DMA zone: 858 pages used for memmap

[ 0.000000] DMA zone: 0 pages reserved

[ 0.000000] DMA zone: 250022 pages, LIFO batch:7

[ 0.000000] On node 1 totalpages: 123904

[ 0.000000] free_area_init_node: node 1, pgdat e00000b003030080, node_mem_map a0007ffb2f22a000

[ 0.000000] DMA zone: 424 pages used for memmap

[ 0.000000] DMA zone: 0 pages reserved

[ 0.000000] DMA zone: 123480 pages, LIFO batch:7

[ 0.000000] On node 2 totalpages: 123904

[ 0.000000] free_area_init_node: node 2, pgdat e000013003040100, node_mem_map a0007ffb9f22a000

[ 0.000000] DMA zone: 424 pages used for memmap

[ 0.000000] DMA zone: 0 pages reserved

[ 0.000000] DMA zone: 123480 pages, LIFO batch:7

[ 0.000000] On node 3 totalpages: 123904

[ 0.000000] free_area_init_node: node 3, pgdat e00001b003050180, node_mem_map a0007ffc0f22a000

[ 0.000000] DMA zone: 424 pages used for memmap

[ 0.000000] DMA zone: 0 pages reserved

[ 0.000000] DMA zone: 123480 pages, LIFO batch:7

[ 0.000000] On node 4 totalpages: 504831

[ 0.000000] free_area_init_node: node 4, pgdat e000023003060200, node_mem_map a0007ffc7f22a000

[ 0.000000] DMA zone: 4442 pages used for memmap

[ 0.000000] DMA zone: 0 pages reserved

[ 0.000000] DMA zone: 500389 pages, LIFO batch:7

[ 0.000000] On node 5 totalpages: 250880

[ 0.000000] free_area_init_node: node 5, pgdat e00002b003070280, node_mem_map a0007ffcef22a000

[ 0.000000] DMA zone: 858 pages used for memmap

[ 0.000000] DMA zone: 0 pages reserved

[ 0.000000] DMA zone: 250022 pages, LIFO batch:7

[ 0.000000] On node 6 totalpages: 250879

[ 0.000000] free_area_init_node: node 6, pgdat e000033003080300, node_mem_map a0007ffd5f22a000

[ 0.000000] DMA zone: 858 pages used for memmap

[ 0.000000] DMA zone: 0 pages reserved

[ 0.000000] DMA zone: 250021 pages, LIFO batch:7

[ 0.000000] On node 7 totalpages: 250880

[ 0.000000] free_area_init_node: node 7, pgdat e00003b003090380, node_mem_map a0007ffdcf22a000

[ 0.000000] DMA zone: 858 pages used for memmap

[ 0.000000] DMA zone: 0 pages reserved

[ 0.000000] DMA zone: 250022 pages, LIFO batch:7

[ 0.000000] On node 8 totalpages: 250880

[ 0.000000] free_area_init_node: node 8, pgdat e0000430030a0400, node_mem_map a0007ffe3f22a000

[ 0.000000] DMA zone: 858 pages used for memmap

[ 0.000000] DMA zone: 0 pages reserved

[ 0.000000] DMA zone: 250022 pages, LIFO batch:7

[ 0.000000] On node 9 totalpages: 250880

[ 0.000000] free_area_init_node: node 9, pgdat e00004b0030b0480, node_mem_map a0007ffeaf22a000

[ 0.000000] DMA zone: 858 pages used for memmap

[ 0.000000] DMA zone: 0 pages reserved

[ 0.000000] DMA zone: 250022 pages, LIFO batch:7

[ 0.000000] On node 10 totalpages: 250880

[ 0.000000] free_area_init_node: node 10, pgdat e0000530030c0500, node_mem_map a0007fff1f22a000

[ 0.000000] DMA zone: 858 pages used for memmap

[ 0.000000] DMA zone: 0 pages reserved

[ 0.000000] DMA zone: 250022 pages, LIFO batch:7

[ 0.000000] On node 11 totalpages: 123904

[ 0.000000] free_area_init_node: node 11, pgdat e00005b0030d0580, node_mem_map a0007fff8f22a000

[ 0.000000] DMA zone: 424 pages used for memmap

[ 0.000000] DMA zone: 0 pages reserved

[ 0.000000] DMA zone: 123480 pages, LIFO batch:7

[ 0.000000] On node 12 totalpages: 249712

[ 0.000000] free_area_init_node: node 12, pgdat e0000630030e0600, node_mem_map a0007fffff22a000

[ 0.000000] DMA zone: 858 pages used for memmap

[ 0.000000] DMA zone: 0 pages reserved

[ 0.000000] DMA zone: 248854 pages, LIFO batch:7

[ 0.000000] Built 13 zonelists in Node order, mobility grouping on. Total pages: 2993316

[ 0.000000] Policy zone: DMA

[ 0.000000] Kernel command line: BOOT_IMAGE=dev001:/EFI/debian/vmlinuz root=/dev/md1 ro

[ 0.000000] PID hash table entries: 4096 (order: 1, 32768 bytes)

[ 0.000000] Memory: 47902096k/48070944k available (7334k code, 198992k reserved, 3581k data, 736k init)

[ 0.000000] SLUB: Genslabs=16, HWalign=128, Order=0-3, MinObjects=0, CPUs=26, Nodes=256

[ 0.000000] Hierarchical RCU implementation.

[ 0.000000] NR_IRQS:1024

[ 0.000000] CPU 0: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.000000] Console: colour dummy device 80x25

[ 0.000000] console [ttySG0] enabled

[ 0.016000] Calibrating delay loop... 2244.60 BogoMIPS (lpj=4489216)

[ 0.117070] Security Framework initialized

[ 0.117747] SELinux: Disabled at boot.

[ 0.127077] Dentry cache hash table entries: 8388608 (order: 12, 67108864 bytes)

[ 0.288337] Inode-cache hash table entries: 4194304 (order: 11, 33554432 bytes)

[ 0.362343] Mount-cache hash table entries: 1024

[ 0.365176] Initializing cgroup subsys ns

[ 0.365912] Initializing cgroup subsys cpuacct

[ 0.368068] Initializing cgroup subsys devices

[ 0.368741] Initializing cgroup subsys freezer

[ 0.388004] Initializing cgroup subsys net_cls

[ 0.389010] ACPI: Core revision 20090903

[ 0.392014] Boot processor id 0x0/0x0

[ 0.012000] Fixed BSP b0 value from CPU 1

[ 0.012000] CPU 1: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 2: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 3: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 4: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 5: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 6: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 7: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 8: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 9: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 10: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 11: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 12: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 13: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 14: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 15: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 16: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 17: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 18: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 19: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 20: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 21: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 22: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 23: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 24: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.012000] CPU 25: base freq=200.000MHz, ITC ratio=15/2, ITC freq=1500.000MHz

[ 0.756984] Brought up 26 CPUs

[ 0.757435] Total of 26 processors activated (58359.80 BogoMIPS).

[ 0.764285] CPU0 attaching sched-domain:

[ 0.764290] domain 0: span 0-1 level CPU

[ 0.764294] groups: group a0000001009a4f70 cpus 0 group e000003003104f70 cpus 1

[ 0.764302] domain 1: span 0-25 level NODE

[ 0.764305] groups: group e0000030f07ac000 cpus 0-1 (cpu_power = 2048) group e0000030f07ac020 cpus 2-3 (cpu_power = 2048) group e0000030f07ac040 cpus 4-5 (cpu_power = 2048) group e0000030f07ac060 cpus 6-7 (cpu_power = 2048) group e0000030f07ac080 cpus 8-9 (cpu_power = 2048) group e0000030f07ac0a0 cpus 10-11 (cpu_power = 2048) group e0000030f07ac0c0 cpus 12-13 (cpu_power = 2048) group e0000030f07ac0e0 cpus 14-15 (cpu_power = 2048) group e0000030f07ac100 cpus 16-17 (cpu_power = 2048) group e0000030f07ac120 cpus 18-19 (cpu_power = 2048) group e0000030f07ac140 cpus 20-21 (cpu_power = 2048) group e0000030f07ac160 cpus 22-23 (cpu_power = 2048) group e0000030f07ac180 cpus 24-25 (cpu_power = 2048)

[ 0.764349] CPU1 attaching sched-domain:

[ 0.764352] domain 0: span 0-1 level CPU

[ 0.764355] groups: group e000003003104f70 cpus 1 group a0000001009a4f70 cpus 0

[ 0.764362] domain 1: span 0-25 level NODE

[ 0.764365] groups: group e0000030f07ac000 cpus 0-1 (cpu_power = 2048) group e0000030f07ac020 cpus 2-3 (cpu_power = 2048) group e0000030f07ac040 cpus 4-5 (cpu_power = 2048) group e0000030f07ac060 cpus 6-7 (cpu_power = 2048) group e0000030f07ac080 cpus 8-9 (cpu_power = 2048) group e0000030f07ac0a0 cpus 10-11 (cpu_power = 2048) group e0000030f07ac0c0 cpus 12-13 (cpu_power = 2048) group e0000030f07ac0e0 cpus 14-15 (cpu_power = 2048) group e0000030f07ac100 cpus 16-17 (cpu_power = 2048) group e0000030f07ac120 cpus 18-19 (cpu_power = 2048) group e0000030f07ac140 cpus 20-21 (cpu_power = 2048) group e0000030f07ac160 cpus 22-23 (cpu_power = 2048) group e0000030f07ac180 cpus 24-25 (cpu_power = 2048)

[ 0.764406] CPU2 attaching sched-domain:

[ 0.764409] domain 0: span 2-3 level CPU

[ 0.764412] groups: group e00000b003014f70 cpus 2 group e00000b003024f70 cpus 3

[ 0.764419] domain 1: span 0-25 level NODE

[ 0.764422] groups: group e00000b078790000 cpus 2-3 (cpu_power = 2048) group e00000b078790020 cpus 4-5 (cpu_power = 2048) group e00000b078790040 cpus 6-7 (cpu_power = 2048) group e00000b078790060 cpus 8-9 (cpu_power = 2048) group e00000b078790080 cpus 10-11 (cpu_power = 2048) group e00000b0787900a0 cpus 12-13 (cpu_power = 2048) group e00000b0787900c0 cpus 14-15 (cpu_power = 2048) group e00000b0787900e0 cpus 16-17 (cpu_power = 2048) group e00000b078790100 cpus 18-19 (cpu_power = 2048) group e00000b078790120 cpus 20-21 (cpu_power = 2048) group e00000b078790140 cpus 22-23 (cpu_power = 2048) group e00000b078790160 cpus 24-25 (cpu_power = 2048) group e00000b078790180 cpus 0-1 (cpu_power = 2048)

[ 0.764465] CPU3 attaching sched-domain:

[ 0.764468] domain 0: span 2-3 level CPU

[ 0.764471] groups: group e00000b003024f70 cpus 3 group e00000b003014f70 cpus 2

[ 0.764478] domain 1: span 0-25 level NODE

[ 0.764481] groups: group e00000b078790000 cpus 2-3 (cpu_power = 2048) group e00000b078790020 cpus 4-5 (cpu_power = 2048) group e00000b078790040 cpus 6-7 (cpu_power = 2048) group e00000b078790060 cpus 8-9 (cpu_power = 2048) group e00000b078790080 cpus 10-11 (cpu_power = 2048) group e00000b0787900a0 cpus 12-13 (cpu_power = 2048) group e00000b0787900c0 cpus 14-15 (cpu_power = 2048) group e00000b0787900e0 cpus 16-17 (cpu_power = 2048) group e00000b078790100 cpus 18-19 (cpu_power = 2048) group e00000b078790120 cpus 20-21 (cpu_power = 2048) group e00000b078790140 cpus 22-23 (cpu_power = 2048) group e00000b078790160 cpus 24-25 (cpu_power = 2048) group e00000b078790180 cpus 0-1 (cpu_power = 2048)

[ 0.764524] CPU4 attaching sched-domain:

[ 0.764527] domain 0: span 4-5 level CPU

[ 0.764530] groups: group e000013003024f70 cpus 4 group e000013003034f70 cpus 5

[ 0.764537] domain 1: span 0-25 level NODE

[ 0.764540] groups: group e000013078770000 cpus 4-5 (cpu_power = 2048) group e000013078770020 cpus 6-7 (cpu_power = 2048) group e000013078770040 cpus 8-9 (cpu_power = 2048) group e000013078770060 cpus 10-11 (cpu_power = 2048) group e000013078770080 cpus 12-13 (cpu_power = 2048) group e0000130787700a0 cpus 14-15 (cpu_power = 2048) group e0000130787700c0 cpus 16-17 (cpu_power = 2048) group e0000130787700e0 cpus 18-19 (cpu_power = 2048) group e000013078770100 cpus 20-21 (cpu_power = 2048) group e000013078770120 cpus 22-23 (cpu_power = 2048) group e000013078770140 cpus 24-25 (cpu_power = 2048) group e000013078770160 cpus 0-1 (cpu_power = 2048) group e000013078770180 cpus 2-3 (cpu_power = 2048)

[ 0.764583] CPU5 attaching sched-domain:

[ 0.764586] domain 0: span 4-5 level CPU

[ 0.764589] groups: group e000013003034f70 cpus 5 group e000013003024f70 cpus 4

[ 0.764596] domain 1: span 0-25 level NODE

[ 0.764599] groups: group e000013078770000 cpus 4-5 (cpu_power = 2048) group e000013078770020 cpus 6-7 (cpu_power = 2048) group e000013078770040 cpus 8-9 (cpu_power = 2048) group e000013078770060 cpus 10-11 (cpu_power = 2048) group e000013078770080 cpus 12-13 (cpu_power = 2048) group e0000130787700a0 cpus 14-15 (cpu_power = 2048) group e0000130787700c0 cpus 16-17 (cpu_power = 2048) group e0000130787700e0 cpus 18-19 (cpu_power = 2048) group e000013078770100 cpus 20-21 (cpu_power = 2048) group e000013078770120 cpus 22-23 (cpu_power = 2048) group e000013078770140 cpus 24-25 (cpu_power = 2048) group e000013078770160 cpus 0-1 (cpu_power = 2048) group e000013078770180 cpus 2-3 (cpu_power = 2048)

[ 0.764642] CPU6 attaching sched-domain:

[ 0.764645] domain 0: span 6-7 level CPU

[ 0.764648] groups: group e00001b003034f70 cpus 6 group e00001b003044f70 cpus 7

[ 0.764655] domain 1: span 0-25 level NODE

[ 0.764658] groups: group e00001b07800bfe0 cpus 6-7 (cpu_power = 2048) group e00001b07800bfc0 cpus 8-9 (cpu_power = 2048) group e00001b07800bfa0 cpus 10-11 (cpu_power = 2048) group e00001b07800bf80 cpus 12-13 (cpu_power = 2048) group e00001b07800bf60 cpus 14-15 (cpu_power = 2048) group e00001b07800bf40 cpus 16-17 (cpu_power = 2048) group e00001b07800bf20 cpus 18-19 (cpu_power = 2048) group e00001b07800bf00 cpus 20-21 (cpu_power = 2048) group e00001b07800bee0 cpus 22-23 (cpu_power = 2048) group e00001b07800bec0 cpus 24-25 (cpu_power = 2048) group e00001b07800bea0 cpus 0-1 (cpu_power = 2048) group e00001b07800be80 cpus 2-3 (cpu_power = 2048) group e00001b07800be60 cpus 4-5 (cpu_power = 2048)

[ 0.764700] CPU7 attaching sched-domain:

[ 0.764703] domain 0: span 6-7 level CPU

[ 0.764706] groups: group e00001b003044f70 cpus 7 group e00001b003034f70 cpus 6

[ 0.764713] domain 1: span 0-25 level NODE

[ 0.764716] groups: group e00001b07800bfe0 cpus 6-7 (cpu_power = 2048) group e00001b07800bfc0 cpus 8-9 (cpu_power = 2048) group e00001b07800bfa0 cpus 10-11 (cpu_power = 2048) group e00001b07800bf80 cpus 12-13 (cpu_power = 2048) group e00001b07800bf60 cpus 14-15 (cpu_power = 2048) group e00001b07800bf40 cpus 16-17 (cpu_power = 2048) group e00001b07800bf20 cpus 18-19 (cpu_power = 2048) group e00001b07800bf00 cpus 20-21 (cpu_power = 2048) group e00001b07800bee0 cpus 22-23 (cpu_power = 2048) group e00001b07800bec0 cpus 24-25 (cpu_power = 2048) group e00001b07800bea0 cpus 0-1 (cpu_power = 2048) group e00001b07800be80 cpus 2-3 (cpu_power = 2048) group e00001b07800be60 cpus 4-5 (cpu_power = 2048)

[ 0.764758] CPU8 attaching sched-domain:

[ 0.764761] domain 0: span 8-9 level CPU

[ 0.764764] groups: group e000023003044f70 cpus 8 group e000023003054f70 cpus 9

[ 0.764771] domain 1: span 0-25 level NODE

[ 0.764774] groups: group e0000234f7b78000 cpus 8-9 (cpu_power = 2048) group e0000234f7b78020 cpus 10-11 (cpu_power = 2048) group e0000234f7b78040 cpus 12-13 (cpu_power = 2048) group e0000234f7b78060 cpus 14-15 (cpu_power = 2048) group e0000234f7b78080 cpus 16-17 (cpu_power = 2048) group e0000234f7b780a0 cpus 18-19 (cpu_power = 2048) group e0000234f7b780c0 cpus 20-21 (cpu_power = 2048) group e0000234f7b780e0 cpus 22-23 (cpu_power = 2048) group e0000234f7b78100 cpus 24-25 (cpu_power = 2048) group e0000234f7b78120 cpus 0-1 (cpu_power = 2048) group e0000234f7b78140 cpus 2-3 (cpu_power = 2048) group e0000234f7b78160 cpus 4-5 (cpu_power = 2048) group e0000234f7b78180 cpus 6-7 (cpu_power = 2048)

[ 0.764817] CPU9 attaching sched-domain:

[ 0.764820] domain 0: span 8-9 level CPU

[ 0.764822] groups: group e000023003054f70 cpus 9 group e000023003044f70 cpus 8

[ 0.764829] domain 1: span 0-25 level NODE

[ 0.764832] groups: group e0000234f7b78000 cpus 8-9 (cpu_power = 2048) group e0000234f7b78020 cpus 10-11 (cpu_power = 2048) group e0000234f7b78040 cpus 12-13 (cpu_power = 2048) group e0000234f7b78060 cpus 14-15 (cpu_power = 2048) group e0000234f7b78080 cpus 16-17 (cpu_power = 2048) group e0000234f7b780a0 cpus 18-19 (cpu_power = 2048) group e0000234f7b780c0 cpus 20-21 (cpu_power = 2048) group e0000234f7b780e0 cpus 22-23 (cpu_power = 2048) group e0000234f7b78100 cpus 24-25 (cpu_power = 2048) group e0000234f7b78120 cpus 0-1 (cpu_power = 2048) group e0000234f7b78140 cpus 2-3 (cpu_power = 2048) group e0000234f7b78160 cpus 4-5 (cpu_power = 2048) group e0000234f7b78180 cpus 6-7 (cpu_power = 2048)

[ 0.764875] CPU10 attaching sched-domain:

[ 0.764878] domain 0: span 10-11 level CPU

[ 0.764881] groups: group e00002b003054f70 cpus 10 group e00002b003064f70 cpus 11

[ 0.764888] domain 1: span 0-25 level NODE

[ 0.764891] groups: group e00002b0f077c000 cpus 10-11 (cpu_power = 2048) group e00002b0f077c020 cpus 12-13 (cpu_power = 2048) group e00002b0f077c040 cpus 14-15 (cpu_power = 2048) group e00002b0f077c060 cpus 16-17 (cpu_power = 2048) group e00002b0f077c080 cpus 18-19 (cpu_power = 2048) group e00002b0f077c0a0 cpus 20-21 (cpu_power = 2048) group e00002b0f077c0c0 cpus 22-23 (cpu_power = 2048) group e00002b0f077c0e0 cpus 24-25 (cpu_power = 2048) group e00002b0f077c100 cpus 0-1 (cpu_power = 2048) group e00002b0f077c120 cpus 2-3 (cpu_power = 2048) group e00002b0f077c140 cpus 4-5 (cpu_power = 2048) group e00002b0f077c160 cpus 6-7 (cpu_power = 2048) group e00002b0f077c180 cpus 8-9 (cpu_power = 2048)

[ 0.764934] CPU11 attaching sched-domain:

[ 0.764937] domain 0: span 10-11 level CPU

[ 0.764940] groups: group e00002b003064f70 cpus 11 group e00002b003054f70 cpus 10

[ 0.764946] domain 1: span 0-25 level NODE

[ 0.764949] groups: group e00002b0f077c000 cpus 10-11 (cpu_power = 2048) group e00002b0f077c020 cpus 12-13 (cpu_power = 2048) group e00002b0f077c040 cpus 14-15 (cpu_power = 2048) group e00002b0f077c060 cpus 16-17 (cpu_power = 2048) group e00002b0f077c080 cpus 18-19 (cpu_power = 2048) group e00002b0f077c0a0 cpus 20-21 (cpu_power = 2048) group e00002b0f077c0c0 cpus 22-23 (cpu_power = 2048) group e00002b0f077c0e0 cpus 24-25 (cpu_power = 2048) group e00002b0f077c100 cpus 0-1 (cpu_power = 2048) group e00002b0f077c120 cpus 2-3 (cpu_power = 2048) group e00002b0f077c140 cpus 4-5 (cpu_power = 2048) group e00002b0f077c160 cpus 6-7 (cpu_power = 2048) group e00002b0f077c180 cpus 8-9 (cpu_power = 2048)

[ 0.764992] CPU12 attaching sched-domain:

[ 0.764995] domain 0: span 12-13 level CPU

[ 0.764998] groups: group e000033003064f70 cpus 12 group e000033003074f70 cpus 13

[ 0.765005] domain 1: span 0-25 level NODE

[ 0.765008] groups: group e0000330f7b78000 cpus 12-13 (cpu_power = 2048) group e0000330f7b78020 cpus 14-15 (cpu_power = 2048) group e0000330f7b78040 cpus 16-17 (cpu_power = 2048) group e0000330f7b78060 cpus 18-19 (cpu_power = 2048) group e0000330f7b78080 cpus 20-21 (cpu_power = 2048) group e0000330f7b780a0 cpus 22-23 (cpu_power = 2048) group e0000330f7b780c0 cpus 24-25 (cpu_power = 2048) group e0000330f7b780e0 cpus 0-1 (cpu_power = 2048) group e0000330f7b78100 cpus 2-3 (cpu_power = 2048) group e0000330f7b78120 cpus 4-5 (cpu_power = 2048) group e0000330f7b78140 cpus 6-7 (cpu_power = 2048) group e0000330f7b78160 cpus 8-9 (cpu_power = 2048) group e0000330f7b78180 cpus 10-11 (cpu_power = 2048)

[ 0.765051] CPU13 attaching sched-domain:

[ 0.765054] domain 0: span 12-13 level CPU

[ 0.765057] groups: group e000033003074f70 cpus 13 group e000033003064f70 cpus 12

[ 0.765064] domain 1: span 0-25 level NODE

[ 0.765067] groups: group e0000330f7b78000 cpus 12-13 (cpu_power = 2048) group e0000330f7b78020 cpus 14-15 (cpu_power = 2048) group e0000330f7b78040 cpus 16-17 (cpu_power = 2048) group e0000330f7b78060 cpus 18-19 (cpu_power = 2048) group e0000330f7b78080 cpus 20-21 (cpu_power = 2048) group e0000330f7b780a0 cpus 22-23 (cpu_power = 2048) group e0000330f7b780c0 cpus 24-25 (cpu_power = 2048) group e0000330f7b780e0 cpus 0-1 (cpu_power = 2048) group e0000330f7b78100 cpus 2-3 (cpu_power = 2048) group e0000330f7b78120 cpus 4-5 (cpu_power = 2048) group e0000330f7b78140 cpus 6-7 (cpu_power = 2048) group e0000330f7b78160 cpus 8-9 (cpu_power = 2048) group e0000330f7b78180 cpus 10-11 (cpu_power = 2048)

[ 0.765110] CPU14 attaching sched-domain:

[ 0.765113] domain 0: span 14-15 level CPU

[ 0.765116] groups: group e00003b003074f70 cpus 14 group e00003b003084f70 cpus 15

[ 0.765122] domain 1: span 0-25 level NODE

[ 0.765125] groups: group e00003b0f0800000 cpus 14-15 (cpu_power = 2048) group e00003b0f0800020 cpus 16-17 (cpu_power = 2048) group e00003b0f0800040 cpus 18-19 (cpu_power = 2048) group e00003b0f0800060 cpus 20-21 (cpu_power = 2048) group e00003b0f0800080 cpus 22-23 (cpu_power = 2048) group e00003b0f08000a0 cpus 24-25 (cpu_power = 2048) group e00003b0f08000c0 cpus 0-1 (cpu_power = 2048) group e00003b0f08000e0 cpus 2-3 (cpu_power = 2048) group e00003b0f0800100 cpus 4-5 (cpu_power = 2048) group e00003b0f0800120 cpus 6-7 (cpu_power = 2048) group e00003b0f0800140 cpus 8-9 (cpu_power = 2048) group e00003b0f0800160 cpus 10-11 (cpu_power = 2048) group e00003b0f0800180 cpus 12-13 (cpu_power = 2048)

[ 0.765168] CPU15 attaching sched-domain:

[ 0.765171] domain 0: span 14-15 level CPU

[ 0.765174] groups: group e00003b003084f70 cpus 15 group e00003b003074f70 cpus 14

[ 0.765181] domain 1: span 0-25 level NODE

[ 0.765184] groups: group e00003b0f0800000 cpus 14-15 (cpu_power = 2048) group e00003b0f0800020 cpus 16-17 (cpu_power = 2048) group e00003b0f0800040 cpus 18-19 (cpu_power = 2048) group e00003b0f0800060 cpus 20-21 (cpu_power = 2048) group e00003b0f0800080 cpus 22-23 (cpu_power = 2048) group e00003b0f08000a0 cpus 24-25 (cpu_power = 2048) group e00003b0f08000c0 cpus 0-1 (cpu_power = 2048) group e00003b0f08000e0 cpus 2-3 (cpu_power = 2048) group e00003b0f0800100 cpus 4-5 (cpu_power = 2048) group e00003b0f0800120 cpus 6-7 (cpu_power = 2048) group e00003b0f0800140 cpus 8-9 (cpu_power = 2048) group e00003b0f0800160 cpus 10-11 (cpu_power = 2048) group e00003b0f0800180 cpus 12-13 (cpu_power = 2048)

[ 0.765227] CPU16 attaching sched-domain:

[ 0.765230] domain 0: span 16-17 level CPU

[ 0.765233] groups: group e000043003084f70 cpus 16 group e000043003094f70 cpus 17

[ 0.765240] domain 1: span 0-25 level NODE

[ 0.765243] groups: group e0000430f0774000 cpus 16-17 (cpu_power = 2048) group e0000430f0774020 cpus 18-19 (cpu_power = 2048) group e0000430f0774040 cpus 20-21 (cpu_power = 2048) group e0000430f0774060 cpus 22-23 (cpu_power = 2048) group e0000430f0774080 cpus 24-25 (cpu_power = 2048) group e0000430f07740a0 cpus 0-1 (cpu_power = 2048) group e0000430f07740c0 cpus 2-3 (cpu_power = 2048) group e0000430f07740e0 cpus 4-5 (cpu_power = 2048) group e0000430f0774100 cpus 6-7 (cpu_power = 2048) group e0000430f0774120 cpus 8-9 (cpu_power = 2048) group e0000430f0774140 cpus 10-11 (cpu_power = 2048) group e0000430f0774160 cpus 12-13 (cpu_power = 2048) group e0000430f0774180 cpus 14-15 (cpu_power = 2048)

[ 0.765286] CPU17 attaching sched-domain:

[ 0.765289] domain 0: span 16-17 level CPU

[ 0.765292] groups: group e000043003094f70 cpus 17 group e000043003084f70 cpus 16

[ 0.765298] domain 1: span 0-25 level NODE

[ 0.765301] groups: group e0000430f0774000 cpus 16-17 (cpu_power = 2048) group e0000430f0774020 cpus 18-19 (cpu_power = 2048) group e0000430f0774040 cpus 20-21 (cpu_power = 2048) group e0000430f0774060 cpus 22-23 (cpu_power = 2048) group e0000430f0774080 cpus 24-25 (cpu_power = 2048) group e0000430f07740a0 cpus 0-1 (cpu_power = 2048) group e0000430f07740c0 cpus 2-3 (cpu_power = 2048) group e0000430f07740e0 cpus 4-5 (cpu_power = 2048) group e0000430f0774100 cpus 6-7 (cpu_power = 2048) group e0000430f0774120 cpus 8-9 (cpu_power = 2048) group e0000430f0774140 cpus 10-11 (cpu_power = 2048) group e0000430f0774160 cpus 12-13 (cpu_power = 2048) group e0000430f0774180 cpus 14-15 (cpu_power = 2048)

[ 0.765344] CPU18 attaching sched-domain:

[ 0.765347] domain 0: span 18-19 level CPU

[ 0.765350] groups: group e00004b003094f70 cpus 18 group e00004b0030a4f70 cpus 19

[ 0.765357] domain 1: span 0-25 level NODE

[ 0.765360] groups: group e00004b0f0770000 cpus 18-19 (cpu_power = 2048) group e00004b0f0770020 cpus 20-21 (cpu_power = 2048) group e00004b0f0770040 cpus 22-23 (cpu_power = 2048) group e00004b0f0770060 cpus 24-25 (cpu_power = 2048) group e00004b0f0770080 cpus 0-1 (cpu_power = 2048) group e00004b0f07700a0 cpus 2-3 (cpu_power = 2048) group e00004b0f07700c0 cpus 4-5 (cpu_power = 2048) group e00004b0f07700e0 cpus 6-7 (cpu_power = 2048) group e00004b0f0770100 cpus 8-9 (cpu_power = 2048) group e00004b0f0770120 cpus 10-11 (cpu_power = 2048) group e00004b0f0770140 cpus 12-13 (cpu_power = 2048) group e00004b0f0770160 cpus 14-15 (cpu_power = 2048) group e00004b0f0770180 cpus 16-17 (cpu_power = 2048)

[ 0.765403] CPU19 attaching sched-domain:

[ 0.765406] domain 0: span 18-19 level CPU

[ 0.765409] groups: group e00004b0030a4f70 cpus 19 group e00004b003094f70 cpus 18

[ 0.765416] domain 1: span 0-25 level NODE

[ 0.765419] groups: group e00004b0f0770000 cpus 18-19 (cpu_power = 2048) group e00004b0f0770020 cpus 20-21 (cpu_power = 2048) group e00004b0f0770040 cpus 22-23 (cpu_power = 2048) group e00004b0f0770060 cpus 24-25 (cpu_power = 2048) group e00004b0f0770080 cpus 0-1 (cpu_power = 2048) group e00004b0f07700a0 cpus 2-3 (cpu_power = 2048) group e00004b0f07700c0 cpus 4-5 (cpu_power = 2048) group e00004b0f07700e0 cpus 6-7 (cpu_power = 2048) group e00004b0f0770100 cpus 8-9 (cpu_power = 2048) group e00004b0f0770120 cpus 10-11 (cpu_power = 2048) group e00004b0f0770140 cpus 12-13 (cpu_power = 2048) group e00004b0f0770160 cpus 14-15 (cpu_power = 2048) group e00004b0f0770180 cpus 16-17 (cpu_power = 2048)

[ 0.765462] CPU20 attaching sched-domain:

[ 0.765465] domain 0: span 20-21 level CPU

[ 0.765468] groups: group e0000530030a4f70 cpus 20 group e0000530030b4f70 cpus 21

[ 0.765474] domain 1: span 0-25 level NODE

[ 0.765477] groups: group e0000530f0774000 cpus 20-21 (cpu_power = 2048) group e0000530f0774020 cpus 22-23 (cpu_power = 2048) group e0000530f0774040 cpus 24-25 (cpu_power = 2048) group e0000530f0774060 cpus 0-1 (cpu_power = 2048) group e0000530f0774080 cpus 2-3 (cpu_power = 2048) group e0000530f07740a0 cpus 4-5 (cpu_power = 2048) group e0000530f07740c0 cpus 6-7 (cpu_power = 2048) group e0000530f07740e0 cpus 8-9 (cpu_power = 2048) group e0000530f0774100 cpus 10-11 (cpu_power = 2048) group e0000530f0774120 cpus 12-13 (cpu_power = 2048) group e0000530f0774140 cpus 14-15 (cpu_power = 2048) group e0000530f0774160 cpus 16-17 (cpu_power = 2048) group e0000530f0774180 cpus 18-19 (cpu_power = 2048)

[ 0.765520] CPU21 attaching sched-domain:

[ 0.765523] domain 0: span 20-21 level CPU

[ 0.765526] groups: group e0000530030b4f70 cpus 21 group e0000530030a4f70 cpus 20

[ 0.765533] domain 1: span 0-25 level NODE

[ 0.765536] groups: group e0000530f0774000 cpus 20-21 (cpu_power = 2048) group e0000530f0774020 cpus 22-23 (cpu_power = 2048) group e0000530f0774040 cpus 24-25 (cpu_power = 2048) group e0000530f0774060 cpus 0-1 (cpu_power = 2048) group e0000530f0774080 cpus 2-3 (cpu_power = 2048) group e0000530f07740a0 cpus 4-5 (cpu_power = 2048) group e0000530f07740c0 cpus 6-7 (cpu_power = 2048) group e0000530f07740e0 cpus 8-9 (cpu_power = 2048) group e0000530f0774100 cpus 10-11 (cpu_power = 2048) group e0000530f0774120 cpus 12-13 (cpu_power = 2048) group e0000530f0774140 cpus 14-15 (cpu_power = 2048) group e0000530f0774160 cpus 16-17 (cpu_power = 2048) group e0000530f0774180 cpus 18-19 (cpu_power = 2048)

[ 0.765579] CPU22 attaching sched-domain:

[ 0.765582] domain 0: span 22-23 level CPU

[ 0.765585] groups: group e00005b0030b4f70 cpus 22 group e00005b0030c4f70 cpus 23

[ 0.765592] domain 1: span 0-25 level NODE

[ 0.765595] groups: group e00005b078770000 cpus 22-23 (cpu_power = 2048) group e00005b078770020 cpus 24-25 (cpu_power = 2048) group e00005b078770040 cpus 0-1 (cpu_power = 2048) group e00005b078770060 cpus 2-3 (cpu_power = 2048) group e00005b078770080 cpus 4-5 (cpu_power = 2048) group e00005b0787700a0 cpus 6-7 (cpu_power = 2048) group e00005b0787700c0 cpus 8-9 (cpu_power = 2048) group e00005b0787700e0 cpus 10-11 (cpu_power = 2048) group e00005b078770100 cpus 12-13 (cpu_power = 2048) group e00005b078770120 cpus 14-15 (cpu_power = 2048) group e00005b078770140 cpus 16-17 (cpu_power = 2048) group e00005b078770160 cpus 18-19 (cpu_power = 2048) group e00005b078770180 cpus 20-21 (cpu_power = 2048)

[ 0.765638] CPU23 attaching sched-domain:

[ 0.765641] domain 0: span 22-23 level CPU

[ 0.765644] groups: group e00005b0030c4f70 cpus 23 group e00005b0030b4f70 cpus 22

[ 0.765651] domain 1: span 0-25 level NODE

[ 0.765654] groups: group e00005b078770000 cpus 22-23 (cpu_power = 2048) group e00005b078770020 cpus 24-25 (cpu_power = 2048) group e00005b078770040 cpus 0-1 (cpu_power = 2048) group e00005b078770060 cpus 2-3 (cpu_power = 2048) group e00005b078770080 cpus 4-5 (cpu_power = 2048) group e00005b0787700a0 cpus 6-7 (cpu_power = 2048) group e00005b0787700c0 cpus 8-9 (cpu_power = 2048) group e00005b0787700e0 cpus 10-11 (cpu_power = 2048) group e00005b078770100 cpus 12-13 (cpu_power = 2048) group e00005b078770120 cpus 14-15 (cpu_power = 2048) group e00005b078770140 cpus 16-17 (cpu_power = 2048) group e00005b078770160 cpus 18-19 (cpu_power = 2048) group e00005b078770180 cpus 20-21 (cpu_power = 2048)

[ 0.765697] CPU24 attaching sched-domain:

[ 0.765700] domain 0: span 24-25 level CPU

[ 0.765703] groups: group e0000630030c4f70 cpus 24 group e0000630030d4f70 cpus 25

[ 0.765710] domain 1: span 0-25 level NODE

[ 0.765713] groups: group e0000630f5134000 cpus 24-25 (cpu_power = 2048) group e0000630f5134020 cpus 0-1 (cpu_power = 2048) group e0000630f5134040 cpus 2-3 (cpu_power = 2048) group e0000630f5134060 cpus 4-5 (cpu_power = 2048) group e0000630f5134080 cpus 6-7 (cpu_power = 2048) group e0000630f51340a0 cpus 8-9 (cpu_power = 2048) group e0000630f51340c0 cpus 10-11 (cpu_power = 2048) group e0000630f51340e0 cpus 12-13 (cpu_power = 2048) group e0000630f5134100 cpus 14-15 (cpu_power = 2048) group e0000630f5134120 cpus 16-17 (cpu_power = 2048) group e0000630f5134140 cpus 18-19 (cpu_power = 2048) group e0000630f5134160 cpus 20-21 (cpu_power = 2048) group e0000630f5134180 cpus 22-23 (cpu_power = 2048)

[ 0.765757] CPU25 attaching sched-domain:

[ 0.765760] domain 0: span 24-25 level CPU

[ 0.765763] groups: group e0000630030d4f70 cpus 25 group e0000630030c4f70 cpus 24

[ 0.765770] domain 1: span 0-25 level NODE

[ 0.765773] groups: group e0000630f5134000 cpus 24-25 (cpu_power = 2048) group e0000630f5134020 cpus 0-1 (cpu_power = 2048) group e0000630f5134040 cpus 2-3 (cpu_power = 2048) group e0000630f5134060 cpus 4-5 (cpu_power = 2048) group e0000630f5134080 cpus 6-7 (cpu_power = 2048) group e0000630f51340a0 cpus 8-9 (cpu_power = 2048) group e0000630f51340c0 cpus 10-11 (cpu_power = 2048) group e0000630f51340e0 cpus 12-13 (cpu_power = 2048) group e0000630f5134100 cpus 14-15 (cpu_power = 2048) group e0000630f5134120 cpus 16-17 (cpu_power = 2048) group e0000630f5134140 cpus 18-19 (cpu_power = 2048) group e0000630f5134160 cpus 20-21 (cpu_power = 2048) group e0000630f5134180 cpus 22-23 (cpu_power = 2048)

[ 0.772462] devtmpfs: initialized

[ 0.787888] DMI not present or invalid.

[ 0.788896] regulator: core version 0.5

[ 0.788896] NET: Registered protocol family 16

[ 0.792155] ACPI: bus type pci registered

[ 0.793001] ACPI DSDT OEM Rev 0x20101

[ 0.822113] bio: create slab <bio-0> at 0

[ 0.825785] ACPI: SCI (ACPI GSI 52) not registered

[ 0.828016] ACPI: EC: Look up EC in DSDT

[ 0.828785] ACPI: Interpreter enabled

[ 0.832003] ACPI: (supports S0)

[ 0.844092] ACPI: Using platform specific model for interrupt routing

[ 0.846647] ACPI: No dock devices found.

[ 0.847371] ACPI: PCI Root Bridge [P000] (0002:00)

[ 0.880610] pci 0002:00:01.0: reg 10 64bit mmio: [0x700000-0x70ffff]

[ 0.880683] pci 0002:00:01.0: reg 18 64bit mmio: [0x710000-0x71ffff]

[ 0.881118] pci 0002:00:01.0: PME# supported from D3hot D3cold

[ 0.896021] pci 0002:00:01.0: PME# disabled

[ 0.897476] pci 0002:00:01.1: reg 10 64bit mmio: [0x720000-0x72ffff]

[ 0.897549] pci 0002:00:01.1: reg 18 64bit mmio: [0x730000-0x73ffff]

[ 0.897978] pci 0002:00:01.1: PME# supported from D3hot D3cold

[ 0.900022] pci 0002:00:01.1: PME# disabled

[ 0.916267] ACPI: PCI Interrupt Routing Table [\_SB_.H000.P000.D010._PRT]

[ 0.916286] ACPI: PCI Interrupt Routing Table [\_SB_.H000.P000.D011._PRT]

[ 0.916427] ACPI: PCI Root Bridge [P001] (0001:00)

[ 0.917480] pci 0001:00:01.0: reg 10 32bit mmio: [0x200000-0x2fffff]

[ 0.918262] pci 0001:00:03.0: reg 10 64bit mmio: [0x700000-0x700fff]

[ 0.920204] pci 0001:00:04.0: reg 10 64bit mmio: [0x710000-0x71ffff]

[ 0.920717] pci 0001:00:04.0: PME# supported from D3hot

[ 0.936021] pci 0001:00:04.0: PME# disabled

[ 0.937010] ACPI: PCI Interrupt Routing Table [\_SB_.H000.P001.D010._PRT]

[ 0.937026] ACPI: PCI Interrupt Routing Table [\_SB_.H000.P001.D030._PRT]

[ 0.937040] ACPI: PCI Interrupt Routing Table [\_SB_.H000.P001.D040._PRT]

[ 0.937249] ACPI: PCI Root Bridge [P000] (0012:00)

[ 0.952716] pci 0012:00:01.0: reg 10 64bit mmio: [0x700000-0x70ffff]

[ 0.953321] pci 0012:00:01.0: PME# supported from D3hot D3cold

[ 0.968026] pci 0012:00:01.0: PME# disabled

[ 0.969573] pci 0012:00:02.0: reg 10 io port: [0x1000-0x10ff]

[ 0.969660] pci 0012:00:02.0: reg 14 64bit mmio: [0x720000-0x73ffff]

[ 0.969747] pci 0012:00:02.0: reg 1c 64bit mmio: [0x740000-0x75ffff]

[ 0.969835] pci 0012:00:02.0: reg 30 32bit mmio pref: [0x800000-0x8fffff]

[ 0.970231] pci 0012:00:02.0: supports D1 D2

[ 0.970971] pci 0012:00:02.1: reg 10 io port: [0x1100-0x11ff]

[ 0.971059] pci 0012:00:02.1: reg 14 64bit mmio: [0x760000-0x77ffff]

[ 0.971146] pci 0012:00:02.1: reg 1c 64bit mmio: [0x780000-0x79ffff]

[ 0.971234] pci 0012:00:02.1: reg 30 32bit mmio pref: [0x900000-0x9fffff]

[ 0.971630] pci 0012:00:02.1: supports D1 D2

[ 0.971989] ACPI: PCI Interrupt Routing Table [\_SB_.H004.P000.D010._PRT]

[ 0.972000] ACPI: PCI Interrupt Routing Table [\_SB_.H004.P000.D020._PRT]

[ 0.972000] ACPI: PCI Interrupt Routing Table [\_SB_.H004.P000.D021._PRT]

[ 0.972000] ACPI: PCI Root Bridge [P001] (0011:00)

[ 0.976276] pci 0011:00:01.0: reg 10 32bit mmio: [0x200000-0x2fffff]

[ 0.977238] pci 0011:00:03.0: reg 10 64bit mmio: [0x700000-0x700fff]

[ 0.978663] pci 0011:00:04.0: reg 10 64bit mmio: [0x710000-0x71ffff]

[ 0.979293] pci 0011:00:04.0: PME# supported from D3hot

[ 0.988025] pci 0011:00:04.0: PME# disabled

[ 0.989159] ACPI: PCI Interrupt Routing Table [\_SB_.H004.P001.D010._PRT]

[ 0.989174] ACPI: PCI Interrupt Routing Table [\_SB_.H004.P001.D030._PRT]

[ 0.989189] ACPI: PCI Interrupt Routing Table [\_SB_.H004.P001.D040._PRT]

[ 0.989481] vgaarb: loaded

[ 0.992106] Switching to clocksource sn2_rtc

[ 1.023997] pnp: PnP ACPI init

[ 1.023997] ACPI: bus type pnp registered

[ 1.032502] pnp: PnP ACPI: found 4 devices

[ 1.033245] ACPI: ACPI bus type pnp unregistered

[ 1.049474] NET: Registered protocol family 2

[ 1.050834] IP route cache hash table entries: 524288 (order: 8, 4194304 bytes)

[ 1.070330] TCP established hash table entries: 524288 (order: 9, 8388608 bytes)

[ 1.105084] TCP bind hash table entries: 65536 (order: 6, 1048576 bytes)

[ 1.108525] TCP: Hash tables configured (established 524288 bind 65536)

[ 1.116043] TCP reno registered

[ 1.119180] NET: Registered protocol family 1

[ 1.120038] Unpacking initramfs...

[ 1.838449] Freeing initrd memory: 17872kB freed

[ 1.839883] perfmon: version 2.0 IRQ 238

[ 1.840521] perfmon: Itanium 2 PMU detected, 16 PMCs, 18 PMDs, 4 counters (47 bits)

[ 1.861831] perfmon: added sampling format default_format

[ 1.868702] perfmon_default_smpl: default_format v2.0 registered

[ 3.522304] audit: initializing netlink socket (disabled)

[ 3.523286] type=2000 audit(1321744276.522:1): initialized

[ 3.530708] HugeTLB registered 256 MB page size, pre-allocated 0 pages

[ 3.547092] VFS: Disk quotas dquot_6.5.2

[ 3.548169] Dquot-cache hash table entries: 2048 (order 0, 16384 bytes)

[ 3.552912] msgmni has been set to 32768

[ 3.557249] alg: No test for stdrng (krng)

[ 3.571219] Block layer SCSI generic (bsg) driver version 0.4 loaded (major 253)

[ 3.587906] io scheduler noop registered

[ 3.588548] io scheduler anticipatory registered

[ 3.589432] io scheduler deadline registered

[ 3.590426] io scheduler cfq registered (default)

[ 3.626776] input: Power Button as /devices/LNXSYSTM:00/LNXPWRBN:00/input/input0

[ 3.640896] ACPI: Power Button [PWRF]

[ 3.641669] input: Sleep Button as /devices/LNXSYSTM:00/LNXSLPBN:00/input/input1

[ 3.642884] ACPI: Sleep Button [SLPF]

[ 3.692040] IRQ 233/system controller events: IRQF_DISABLED is not guaranteed on shared IRQs

[ 3.694160] Linux agpgart interface v0.103

[ 3.695153] Serial: 8250/16550 driver, 4 ports, IRQ sharing enabled

[ 3.706345] sn_console: Console driver init

[ 3.707182] ttySG0 at I/O 0x0 (irq = 0) is a SGI SN L1

[ 3.723441] IRQ 233/SAL console driver: IRQF_DISABLED is not guaranteed on shared IRQs

[ 3.725198] mice: PS/2 mouse device common for all mice

[ 3.756312] rtc-efi rtc-efi: rtc core: registered rtc-efi as rtc0

[ 3.767083] TCP cubic registered

[ 3.767600] NET: Registered protocol family 17

[ 3.774698] registered taskstats version 1

[ 3.779644] rtc-efi rtc-efi: setting system clock to 2011-11-19 23:11:16 UTC (1321744276)

[ 3.792220] Freeing unused kernel memory: 736kB freed

[ 3.830140] udev[282]: starting version 164

[ 3.868602] SCSI subsystem initialized

[ 3.869707] IOC4 0001:00:01.0: PCI INT A -> GSI 60 (level, low) -> IRQ 60

[ 3.877495] IOC4 0001:00:01.0: IO10 card detected.

[ 3.904747] libata version 3.00 loaded.

[ 3.915873] tg3.c:v3.116 (December 3, 2010)

[ 3.916777] tg3 0002:00:01.0: PCI INT A -> GSI 63 (level, low) -> IRQ 63

[ 3.926555] Fusion MPT base driver 3.04.12

[ 3.927311] Copyright (c) 1999-2008 LSI Corporation

[ 3.948586] Fusion MPT SPI Host driver 3.04.12

[ 3.949657] mptspi 0012:00:02.0: PCI INT A -> GSI 69 (level, low) -> IRQ 69

[ 3.953834] tg3 0002:00:01.0: eth0: Tigon3 [partno(9210292) rev 2003] (PCIX:100MHz:64-bit) MAC address 00:e0:ed:08:48:dc

[ 3.953840] tg3 0002:00:01.0: eth0: attached PHY is 5704 (10/100/1000Base-T Ethernet) (WireSpeed[1])

[ 3.953845] tg3 0002:00:01.0: eth0: RXcsums[1] LinkChgREG[0] MIirq[0] ASF[0] TSOcap[1]

[ 3.953849] tg3 0002:00:01.0: eth0: dma_rwctrl[769f4000] dma_mask[64-bit]

[ 3.953945] tg3 0002:00:01.1: PCI INT B -> GSI 64 (level, low) -> IRQ 64

[ 3.989503] tg3 0002:00:01.1: eth1: Tigon3 [partno(9210292) rev 2003] (PCIX:100MHz:64-bit) MAC address 00:e0:ed:08:48:dd

[ 3.989509] tg3 0002:00:01.1: eth1: attached PHY is 5704 (10/100/1000Base-T Ethernet) (WireSpeed[1])

[ 3.989514] tg3 0002:00:01.1: eth1: RXcsums[1] LinkChgREG[0] MIirq[0] ASF[0] TSOcap[1]

[ 3.989517] tg3 0002:00:01.1: eth1: dma_rwctrl[769f4000] dma_mask[64-bit]

[ 4.094365] mptbase: ioc0: Initiating bringup

[ 4.135905] IOC4 0001:00:01.0: PCI clock is 15 ns.

[ 4.135934] IOC4 loading sgiioc4 submodule

[ 4.136762] sata_vsc 0001:00:03.0: version 2.3

[ 4.136849] IOC4 0011:00:01.0: PCI INT A -> GSI 65 (level, low) -> IRQ 65

[ 4.136946] sata_vsc 0001:00:03.0: PCI INT A -> GSI 61 (level, low) -> IRQ 61

[ 4.137924] scsi0 : sata_vsc

[ 4.138379] scsi1 : sata_vsc

[ 4.138550] scsi2 : sata_vsc

[ 4.138744] scsi3 : sata_vsc

[ 4.138834] ata1: SATA max UDMA/133 mmio m4096@0x8c0700000 port 0x8c0700200 irq 71

[ 4.138842] ata2: SATA max UDMA/133 mmio m4096@0x8c0700000 port 0x8c0700400 irq 71

[ 4.138850] ata3: SATA max UDMA/133 mmio m4096@0x8c0700000 port 0x8c0700600 irq 71

[ 4.138858] ata4: SATA max UDMA/133 mmio m4096@0x8c0700000 port 0x8c0700800 irq 71

[ 4.139014] tg3 0001:00:04.0: PCI INT A -> GSI 62 (level, low) -> IRQ 62

[ 4.336694] IOC4 0011:00:01.0: IO10 card detected.

[ 4.459440] ata1: SATA link up 1.5 Gbps (SStatus 113 SControl 300)

[ 4.476946] ata1.00: ATA-6: HDS722580VLSA80, V32OA60A, max UDMA/100

[ 4.478049] ata1.00: 160836480 sectors, multi 0: LBA48

[ 4.504916] ata1.00: configured for UDMA/100

[ 4.507246] scsi 0:0:0:0: Direct-Access ATA HDS722580VLSA80 V32O PQ: 0 ANSI: 5

[ 4.577738] ioc0: LSI53C1030 B2: Capabilities={Initiator,Target}

[ 4.595902] IOC4 0011:00:01.0: PCI clock is 15 ns.

[ 4.595907] IOC4 loading sgiioc4 submodule

[ 4.596863] sata_vsc 0011:00:03.0: PCI INT A -> GSI 66 (level, low) -> IRQ 66

[ 4.603732] scsi4 : sata_vsc

[ 4.604482] scsi5 : sata_vsc

[ 4.620917] scsi6 : sata_vsc

[ 4.621703] scsi7 : sata_vsc

[ 4.634768] ata5: SATA max UDMA/133 mmio m4096@0x208c0700000 port 0x208c0700200 irq 72

[ 4.636223] ata6: SATA max UDMA/133 mmio m4096@0x208c0700000 port 0x208c0700400 irq 72

[ 4.637599] ata7: SATA max UDMA/133 mmio m4096@0x208c0700000 port 0x208c0700600 irq 72

[ 4.673993] Uniform Multi-Platform E-IDE driver

[ 4.675032] ata8: SATA max UDMA/133 mmio m4096@0x208c0700000 port 0x208c0700800 irq 72

[ 4.831396] ata2: SATA link up 1.5 Gbps (SStatus 113 SControl 300)

[ 4.848864] ata2.00: ATA-6: HDS722580VLSA80, V32OA6MA, max UDMA/100

[ 4.850018] ata2.00: 160836480 sectors, multi 0: LBA48

[ 4.876873] ata2.00: configured for UDMA/100

[ 4.879225] scsi 1:0:0:0: Direct-Access ATA HDS722580VLSA80 V32O PQ: 0 ANSI: 5

[ 4.894941] sd 0:0:0:0: [sda] 160836480 512-byte logical blocks: (82.3 GB/76.6 GiB)

[ 4.895360] sd 1:0:0:0: [sdb] 160836480 512-byte logical blocks: (82.3 GB/76.6 GiB)

[ 4.895466] sd 1:0:0:0: [sdb] Write Protect is off

[ 4.895470] sd 1:0:0:0: [sdb] Mode Sense: 00 3a 00 00

[ 4.895504] sd 1:0:0:0: [sdb] Write cache: disabled, read cache: enabled, doesn't support DPO or FUA

[ 4.895830] sdb:

[ 4.933225] sd 0:0:0:0: [sda] Write Protect is off

[ 4.934684] sdb1 sdb2 sdb3

[ 4.954363] sd 0:0:0:0: [sda] Mode Sense: 00 3a 00 00

[ 4.954405] sd 0:0:0:0: [sda] Write cache: enabled, read cache: enabled, doesn't support DPO or FUA

[ 4.954680] sd 1:0:0:0: [sdb] Attached SCSI disk

[ 4.957114] sda:

[ 4.996361] ata5: SATA link down (SStatus 0 SControl 300)

[ 5.003643] sda1 sda2 sda3

[ 5.005698] sd 0:0:0:0: [sda] Attached SCSI disk

[ 5.072646] scsi8 : ioc0: LSI53C1030 B2, FwRev=01032710h, Ports=1, MaxQ=255, IRQ=69

[ 5.203316] ata3: SATA link down (SStatus 0 SControl 300)

[ 5.519484] tg3 0001:00:04.0: eth2: Tigon3 [partno(030-1771-000) rev 0105] (PCI:66MHz:64-bit) MAC address 08:00:69:13:f9:4d

[ 5.521341] tg3 0001:00:04.0: eth2: attached PHY is 5701 (10/100/1000Base-T Ethernet) (WireSpeed[1])

[ 5.522912] tg3 0001:00:04.0: eth2: RXcsums[1] LinkChgREG[0] MIirq[0] ASF[0] TSOcap[0]

[ 5.523287] ata4: SATA link down (SStatus 0 SControl 300)

[ 5.552907] tg3 0001:00:04.0: eth2: dma_rwctrl[76ff3f0f] dma_mask[64-bit]

[ 5.711143] tg3 0012:00:01.0: PCI INT A -> GSI 68 (level, low) -> IRQ 68

[ 5.843250] ata6: SATA link down (SStatus 0 SControl 300)

[ 6.163221] ata7: SATA link down (SStatus 0 SControl 300)

[ 6.483194] ata8: SATA link down (SStatus 0 SControl 300)

[ 6.486959] SGIIOC4: IDE controller at PCI slot 0001:00:01.0, revision 83

[ 6.492540] ide0: MMIO-DMA

[ 6.493206] Probing IDE interface ide0...

[ 7.091169] tg3 0012:00:01.0: eth3: Tigon3 [partno(9210289) rev 0105] (PCIX:133MHz:64-bit) MAC address 08:00:69:14:76:83

[ 7.092947] tg3 0012:00:01.0: eth3: attached PHY is 5701 (10/100/1000Base-T Ethernet) (WireSpeed[1])

[ 7.094709] tg3 0012:00:01.0: eth3: RXcsums[1] LinkChgREG[0] MIirq[0] ASF[0] TSOcap[0]

[ 7.123991] tg3 0012:00:01.0: eth3: dma_rwctrl[76db1b0f] dma_mask[64-bit]

[ 7.125287] tg3 0011:00:04.0: PCI INT A -> GSI 67 (level, low) -> IRQ 67

[ 7.125310] mptspi 0012:00:02.1: PCI INT B -> GSI 70 (level, low) -> IRQ 70

[ 7.126423] mptbase: ioc1: Initiating bringup

[ 7.229500] hda: MATSHITADVD-ROM SR-8177, ATAPI CD/DVD-ROM drive

[ 7.565107] hda: MWDMA2 mode selected

[ 7.566071] ide0 at 0xc00000080f200100-0xc00000080f20011c,0xc00000080f200120 on irq 60

[ 7.574816] SGIIOC4: IDE controller at PCI slot 0011:00:01.0, revision 83

[ 7.583684] ide1: MMIO-DMA

[ 7.583821] ide-cd driver 5.00

[ 7.584992] Probing IDE interface ide1...

[ 7.585448] ide-cd: hda: ATAPI 61X DVD-ROM drive

[ 7.605524] ioc1: LSI53C1030 B2: Capabilities={Initiator,Target}

[ 7.613330] , 256kB Cache

[ 7.613943] Uniform CD-ROM driver Revision: 3.20

[ 8.096401] scsi9 : ioc1: LSI53C1030 B2, FwRev=01032710h, Ports=1, MaxQ=255, IRQ=70

[ 8.321582] hdc: MATSHITADVD-ROM SR-8178, ATAPI CD/DVD-ROM drive

[ 8.531058] tg3 0011:00:04.0: eth4: Tigon3 [partno(030-1771-000) rev 0105] (PCI:66MHz:64-bit) MAC address 08:00:69:14:01:e7

[ 8.532927] tg3 0011:00:04.0: eth4: attached PHY is 5701 (10/100/1000Base-T Ethernet) (WireSpeed[1])

[ 8.534524] tg3 0011:00:04.0: eth4: RXcsums[1] LinkChgREG[0] MIirq[0] ASF[0] TSOcap[0]

[ 8.563903] tg3 0011:00:04.0: eth4: dma_rwctrl[76ff3f0f] dma_mask[64-bit]

[ 8.661176] hdc: MWDMA2 mode selected

[ 8.662065] ide1 at 0xc00002080f200100-0xc00002080f20011c,0xc00002080f200120 on irq 65

[ 8.672094] ide-cd: hdc: ATAPI 24X DVD-ROM drive, 256kB Cache

[ 8.901483] md: raid1 personality registered for level 1

[ 8.967207] md: md0 stopped.

[ 8.969127] md: bind<sdb2>

[ 8.969832] md: bind<sda2>

[ 8.979161] raid1: raid set md0 active with 2 out of 2 mirrors

[ 8.991436] md0: detected capacity change from 0 to 8911781888

[ 8.993859] md0: unknown partition table

[ 9.034225] md: md1 stopped.

[ 9.064466] md: bind<sdb3>

[ 9.065208] md: bind<sda3>

[ 9.068740] raid1: raid set md1 active with 2 out of 2 mirrors

[ 9.073656] md1: detected capacity change from 0 to 72909914112

[ 9.091070] md1: unknown partition table

[ 9.309054] SGI XFS with ACLs, security attributes, realtime, large block/inode numbers, no debug enabled

[ 9.322269] SGI XFS Quota Management subsystem

[ 9.391121] XFS mounting filesystem md1

[ 9.625952] Starting XFS recovery on filesystem: md1 (logdev: internal)

[ 9.870938] Ending XFS recovery on filesystem: md1 (logdev: internal)

[ 12.053927] udev[651]: starting version 164

[ 12.626989] udev[678]: renamed network interface eth1 to eth1-eth2

[ 12.627704] udev[682]: renamed network interface eth4 to eth4-eth3

[ 12.628448] udev[673]: renamed network interface eth0 to eth1

[ 12.629295] udev[718]: renamed network interface eth3 to eth3-eth4

[ 12.630309] udev[669]: renamed network interface eth2 to eth0

[ 12.682027] udev[682]: renamed network interface eth4-eth3 to eth3

[ 12.684131] udev[718]: renamed network interface eth3-eth4 to eth4

[ 12.717560] udev[678]: renamed network interface eth1-eth2 to eth2

[ 13.940954] Adding 8702880k swap on /dev/md0. Priority:-1 extents:1 across:8702880k

[ 14.332224] loop: module loaded

[ 16.234308] fuse init (API version 7.13)

[ 17.645404] RPC: Registered udp transport module.

[ 17.646271] RPC: Registered tcp transport module.

[ 17.647161] RPC: Registered tcp NFSv4.1 backchannel transport module.

[ 17.879273] Installing knfsd (copyright (C) 1996 okir@monad.swb.de).

[ 18.041619] NET: Registered protocol family 10

[ 18.047666] ADDRCONF(NETDEV_UP): eth0: link is not ready

[ 18.056842] tg3 0001:00:04.0: eth0: Link is up at 100 Mbps, full duplex

[ 18.059333] tg3 0001:00:04.0: eth0: Flow control is off for TX and off for RX

[ 18.081046] ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[ 18.107988] svc: failed to register lockdv1 RPC service (errno 97).

[ 18.112074] NFSD: Using /var/lib/nfs/v4recovery as the NFSv4 state recovery directory

[ 18.118593] NFSD: starting 90-second grace period

[ 19.157583] NetworkManager(1549): unaligned access to 0x600000000002621c, ip=0x20000000007d9a70

[ 19.159086] NetworkManager(1549): unaligned access to 0x6000000000026224, ip=0x20000000007d9aa0

[ 19.160628] NetworkManager(1549): unaligned access to 0x600000000002622c, ip=0x20000000007d9ac0

[ 19.190258] NetworkManager(1549): unaligned access to 0x600000000002621c, ip=0x20000000007d9a70

[ 19.199728] NetworkManager(1549): unaligned access to 0x6000000000026224, ip=0x20000000007d9aa0

[ 19.810913] tg3 0002:00:01.0: firmware: requesting tigon/tg3_tso.bin

[ 19.969488] ADDRCONF(NETDEV_UP): eth1: link is not ready

[ 19.990359] tg3 0002:00:01.1: firmware: requesting tigon/tg3_tso.bin

[ 20.089943] ADDRCONF(NETDEV_UP): eth2: link is not ready

[ 20.372900] ADDRCONF(NETDEV_UP): eth3: link is not ready

[ 20.655776] ADDRCONF(NETDEV_UP): eth4: link is not ready

[ 23.938160] Bluetooth: Core ver 2.15

[ 23.939650] NET: Registered protocol family 31

[ 23.940484] Bluetooth: HCI device and connection manager initialized

[ 23.946998] Bluetooth: HCI socket layer initialized

[ 24.167962] lp: driver loaded but no devices found

[ 24.207608] ppdev: user-space parallel port driver

[ 24.249033] Bluetooth: L2CAP ver 2.14

[ 24.249819] Bluetooth: L2CAP socket layer initialized

[ 24.279871] Bluetooth: RFCOMM TTY layer initialized

[ 24.280785] Bluetooth: RFCOMM socket layer initialized

[ 24.281718] Bluetooth: RFCOMM ver 1.11

[ 24.915432] Bluetooth: BNEP (Ethernet Emulation) ver 1.3

[ 24.916337] Bluetooth: BNEP filters: protocol multicast

[ 24.989511] Bridge firewalling registered

[ 24.993664] NetworkManager(1549): unaligned access to 0x6000000000074b1c, ip=0x20000000007d9a70

[ 24.998595] NetworkManager(1549): unaligned access to 0x6000000000074b24, ip=0x20000000007d9aa0

[ 25.000104] NetworkManager(1549): unaligned access to 0x6000000000074b2c, ip=0x20000000007d9ac0

[ 25.119557] Bluetooth: SCO (Voice Link) ver 0.6

[ 25.120431] Bluetooth: SCO socket layer initialized

[ 28.292710] eth0: no IPv6 routers present

[ 1638.475707] lp: driver loaded but no devices found

[ 1638.523242] ide-gd driver 1.18

[ 1638.583026] st: Version 20081215, fixed bufsize 32768, s/g segs 256

[ 1640.354648] sd 0:0:0:0: Attached scsi generic sg0 type 0

[ 1640.356246] sd 1:0:0:0: Attached scsi generic sg1 type 0

[ 1651.433832] lp: driver loaded but no devices found

winterstar:~#
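A note on the interface names, since they are easy to misread. The tg3 ports are probed as eth0 through eth4. At about 12.6 s udev renames them, and when a target name is already taken it first moves the interface to a temporary `ethX-ethY` name. That is why the port at 0001:00:04.0, which probes as eth2, later reports "eth0: Link is up". The quick Python sketch below is not part of the original session. It replays the eight udev rename lines from the log and prints where each probe-time name ended up. The `final_names` helper is a hypothetical name used only for illustration, and the sketch assumes the log lines are fed to it in the order they were printed.

```python
import re

# The eight udev rename lines, copied from the dmesg output above.
UDEV_LOG = """\
udev[678]: renamed network interface eth1 to eth1-eth2
udev[682]: renamed network interface eth4 to eth4-eth3
udev[673]: renamed network interface eth0 to eth1
udev[718]: renamed network interface eth3 to eth3-eth4
udev[669]: renamed network interface eth2 to eth0
udev[682]: renamed network interface eth4-eth3 to eth3
udev[718]: renamed network interface eth3-eth4 to eth4
udev[678]: renamed network interface eth1-eth2 to eth2
"""

RENAME_RE = re.compile(r"renamed network interface (\S+) to (\S+)")


def final_names(log: str) -> dict[str, str]:
    """Hypothetical helper: map each probe-time name to its final name."""
    # current name -> name the kernel assigned at probe time
    origin: dict[str, str] = {}
    for old, new in RENAME_RE.findall(log):
        # A name not seen before is still the probe-time name.
        first = origin.pop(old, old)
        origin[new] = first
    # Temporary "ethX-ethY" names were popped when renamed again,
    # so only final names remain; invert to probe-time -> final.
    return {first: cur for cur, first in origin.items()}


if __name__ == "__main__":
    for before, after in sorted(final_names(UDEV_LOG).items()):
        print(f"{before} -> {after}")
```

Run against the log above, this prints eth0 -> eth1, eth1 -> eth2, eth2 -> eth0, eth3 -> eth4 and eth4 -> eth3. That swap explains why the hwinfo listing and the probe-time names in dmesg don't line up one-to-one.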